『PyTorch Notes』 Visualizing PyTorch Models with the Netron Tool!


2022-06-05 22:02:05


Netron is a well-received open-source project by lutzroeder, a developer at Microsoft. Repository: https://github.com/lutzroeder/Netro

Netron supports ONNX (.onnx, .pb, .pbtxt), Keras (.h5, .keras), Core ML (.mlmodel), Caffe (.caffemodel, .prototxt), Caffe2 (predict_net.pb, predict_net.pbtxt), MXNet (.model, -symbol.json), NCNN (.param) and TensorFlow Lite (.tflite).

- Install netron:

pip install netron

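1. Example 1

- Test code: Netron does not support PyTorch's default model format (.pth), so the model has to be saved as ONNX first. Fortunately, PyTorch supports ONNX export!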

import math

import torch
import torch.nn as nn
import torch.onnx  # added for visualization: ONNX exporter
import netron  # added for visualization: model viewer

__all__ = ['vgg']

# Per-depth layer configuration: an int is a conv layer's output channels,
# 'M' is a 2x2 max-pooling layer.
defaultcfg = {
    11: [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512],
    13: [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512],
    16: [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512],
    19: [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512],
}


class vgg(nn.Module):
    def __init__(self, dataset='cifar10', depth=19, init_weights=True, cfg=None):
        super(vgg, self).__init__()
        if cfg is None:
            cfg = defaultcfg[depth]

        self.feature = self.make_layers(cfg, True)

        if dataset == 'cifar10':
            num_classes = 10
        elif dataset == 'cifar100':
            num_classes = 100
        self.classifier = nn.Linear(cfg[-1], num_classes)
        if init_weights:
            self._initialize_weights()

    def make_layers(self, cfg, batch_norm=False):
        layers = []
        in_channels = 3
        for v in cfg:
            if v == 'M':
                layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
            else:
                conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1, bias=False)
                if batch_norm:
                    layers += [conv2d, nn.BatchNorm2d(v), nn.ReLU(inplace=True)]
                else:
                    layers += [conv2d, nn.ReLU(inplace=True)]
                in_channels = v
        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.feature(x)
        x = nn.AvgPool2d(2)(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, features)
        y = self.classifier(x)
        return y

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.data.normal_(0, math.sqrt(2. / n))
                if m.bias is not None:
                    m.bias.data.zero_()
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(0.5)
                m.bias.data.zero_()
            elif isinstance(m, nn.Linear):
                m.weight.data.normal_(0, 0.01)
                m.bias.data.zero_()


if __name__ == '__main__':
    net = vgg()
    # Random dummy input; torch.FloatTensor(16, 3, 40, 40) would leave the
    # memory uninitialized, and the Variable wrapper is no longer needed.
    x = torch.randn(16, 3, 40, 40)
    y = net(x)
    print(y.shape)  # torch.Size([16, 10])

    onnx_path = "onnx_model_name.onnx"
    torch.onnx.export(net, x, onnx_path)  # trace the model and save it as ONNX
    netron.start(onnx_path)  # serve the file and open it in the browser

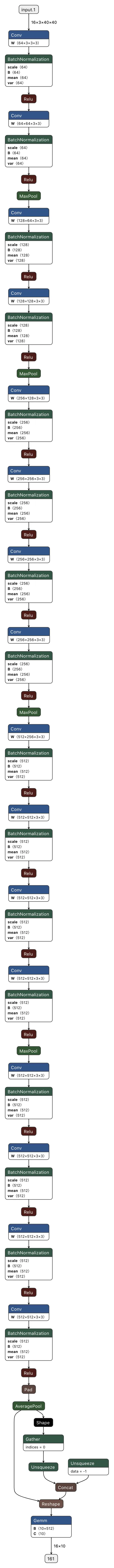

- After the code above runs, Netron opens the model graph in the local browser; the interface is similar to TensorBoard!
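
Why does `nn.Linear(cfg[-1], num_classes)` work for the 40x40 dummy input in Example 1? The following is a minimal pure-Python sketch (the helper name `trace_vgg` is made up for illustration and is not part of the original code) that follows the spatial size through the depth-19 configuration, assuming each 3x3 convolution with `padding=1` keeps the size and each 2x2 pooling halves it, rounding down.

```python
# Depth-19 configuration copied from defaultcfg in Example 1.
CFG_19 = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M',
          512, 512, 512, 512, 'M', 512, 512, 512, 512]


def trace_vgg(cfg, size, final_pool=2):
    """Return (channels, spatial_size) after the feature stack and the final average pool.

    Hypothetical helper: it only mirrors the shape arithmetic, it builds no layers.
    """
    channels = 3
    for v in cfg:
        if v == 'M':
            size //= 2      # MaxPool2d(kernel_size=2, stride=2) floors odd sizes
        else:
            channels = v    # 3x3 conv with padding=1 keeps the spatial size
    size //= final_pool     # AvgPool2d(2) applied in forward()
    return channels, size


channels, size = trace_vgg(CFG_19, 40)
flattened = channels * size * size  # length of each row after x.view(x.size(0), -1)
print(channels, size, flattened)
```

For a 40x40 input the size goes 40, 20, 10, 5, 2 and then 1 after the average pool, so the flattened vector has 512 entries, which equals `cfg[-1]`. A 64x64 input would end at 2x2 and produce 2048 features, which would no longer match the classifier.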

2. Example 2

from __future__ import absolute_import
import math

import torch  # needed for the dummy input and torch.onnx below
import torch.nn as nn
import torch.onnx  # added for visualization: ONNX exporter
import netron  # added for visualization: model viewer

# Local module (not part of PyTorch); it must be importable for this script to run.
from channel_selection import channel_selection

__all__ = ['resnet']

"""
preactivation resnet with bottleneck design.
"""


class Bottleneck(nn.Module):
    expansion = 4

    def __init__(self, inplanes, planes, cfg, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        self.bn1 = nn.BatchNorm2d(inplanes)
        self.select = channel_selection(inplanes)
        self.conv1 = nn.Conv2d(cfg[0], cfg[1], kernel_size=1, bias=False)
        self.bn2 = nn.BatchNorm2d(cfg[1])
        self.conv2 = nn.Conv2d(cfg[1], cfg[2], kernel_size=3, stride=stride,
                               padding=1, bias=False)
        self.bn3 = nn.BatchNorm2d(cfg[2])
        self.conv3 = nn.Conv2d(cfg[2], planes * 4, kernel_size=1, bias=False)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x

        out = self.bn1(x)
        out = self.select(out)
        out = self.relu(out)
        out = self.conv1(out)

        out = self.bn2(out)
        out = self.relu(out)
        out = self.conv2(out)

        out = self.bn3(out)
        out = self.relu(out)
        out = self.conv3(out)

        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual

        return out


class resnet(nn.Module):
    def __init__(self, depth=164, dataset='cifar10', cfg=None):
        super(resnet, self).__init__()
        assert (depth - 2) % 9 == 0, 'depth should be 9n+2'

        n = (depth - 2) // 9
        block = Bottleneck

        if cfg is None:
            # Construct config variable.
            cfg = [[16, 16, 16], [64, 16, 16]*(n-1), [64, 32, 32], [128, 32, 32]*(n-1), [128, 64, 64], [256, 64, 64]*(n-1), [256]]
            cfg = [item for sub_list in cfg for item in sub_list]

        self.inplanes = 16

        self.conv1 = nn.Conv2d(3, 16, kernel_size=3, padding=1,
                               bias=False)
        self.layer1 = self._make_layer(block, 16, n, cfg=cfg[0:3*n])
        self.layer2 = self._make_layer(block, 32, n, cfg=cfg[3*n:6*n], stride=2)
        self.layer3 = self._make_layer(block, 64, n, cfg=cfg[6*n:9*n], stride=2)
        self.bn = nn.BatchNorm2d(64 * block.expansion)
        self.select = channel_selection(64 * block.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.avgpool = nn.AvgPool2d(8)

        if dataset == 'cifar10':
            self.fc = nn.Linear(cfg[-1], 10)
        elif dataset == 'cifar100':
            self.fc = nn.Linear(cfg[-1], 100)

        # Weight initialization
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.data.normal_(0, math.sqrt(2. / n))
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(0.5)
                m.bias.data.zero_()

    def _make_layer(self, block, planes, blocks, cfg, stride=1):
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, planes * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
            )

        layers = []
        layers.append(block(self.inplanes, planes, cfg[0:3], stride, downsample))
        self.inplanes = planes * block.expansion
        for i in range(1, blocks):
            layers.append(block(self.inplanes, planes, cfg[3*i: 3*(i+1)]))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)

        # The sizes in the comments assume a 32x32 CIFAR input.
        x = self.layer1(x)  # 32x32
        x = self.layer2(x)  # 16x16
        x = self.layer3(x)  # 8x8
        x = self.bn(x)
        x = self.select(x)
        x = self.relu(x)

        x = self.avgpool(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, features)
        x = self.fc(x)

        return x


if __name__ == '__main__':
    net = resnet()
    # Random dummy input; torch.FloatTensor(16, 3, 40, 40) would leave the
    # memory uninitialized, and the Variable wrapper is no longer needed.
    x = torch.randn(16, 3, 40, 40)
    y = net(x)
    print(y.shape)

    onnx_path = "onnx_model_name.onnx"
    torch.onnx.export(net, x, onnx_path)  # trace the model and save it as ONNX
    netron.start(onnx_path)  # serve the file and open it in the browser
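
The default `cfg` list in Example 2 is easy to misread, because `[64, 16, 16]*(n-1)` repeats the triple inside one flat sublist. The sketch below is pure Python with no PyTorch needed; the function name `default_resnet_cfg` is a hypothetical helper, not part of the original script. It rebuilds the list for `depth=164` and shows how the three `cfg[...]` slices passed to `_make_layer` divide it.

```python
def default_resnet_cfg(depth=164):
    """Rebuild the default channel configuration used by resnet.__init__ (illustrative helper)."""
    assert (depth - 2) % 9 == 0, 'depth should be 9n+2'
    n = (depth - 2) // 9
    nested = [[16, 16, 16], [64, 16, 16] * (n - 1),
              [64, 32, 32], [128, 32, 32] * (n - 1),
              [128, 64, 64], [256, 64, 64] * (n - 1),
              [256]]
    flat = [item for sub_list in nested for item in sub_list]
    return n, flat


n, cfg = default_resnet_cfg(164)

# Same slices as in resnet.__init__: 3 entries per Bottleneck, n Bottlenecks per stage.
stage1, stage2, stage3 = cfg[0:3 * n], cfg[3 * n:6 * n], cfg[6 * n:9 * n]

print(n, len(cfg))                  # blocks per stage, total cfg length (9n + 1)
print(stage1[:6], stage2[:6], stage3[:6])
print(cfg[-1])                      # input width of the final nn.Linear
```

Each triple is `[conv1 input channels, conv1 output channels, conv2 output channels]` for one Bottleneck, and the single trailing entry is the number of features fed to `self.fc`.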

3. Example 3

import math

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.onnx  # added for visualization: ONNX exporter
import netron  # added for visualization: model viewer

# Local module (not part of PyTorch); it must be importable for this script to run.
from channel_selection import channel_selection

__all__ = ['densenet']

"""
densenet with basic block.
"""


class BasicBlock(nn.Module):
    def __init__(self, inplanes, cfg, expansion=1, growthRate=12, dropRate=0):
        super(BasicBlock, self).__init__()
        planes = expansion * growthRate
        self.bn1 = nn.BatchNorm2d(inplanes)
        self.select = channel_selection(inplanes)
        self.conv1 = nn.Conv2d(cfg, growthRate, kernel_size=3,
                               padding=1, bias=False)
        self.relu = nn.ReLU(inplace=True)
        self.dropRate = dropRate

    def forward(self, x):
        out = self.bn1(x)
        out = self.select(out)
        out = self.relu(out)
        out = self.conv1(out)
        if self.dropRate > 0:
            out = F.dropout(out, p=self.dropRate, training=self.training)

        # Dense connectivity: concatenate the input with the new feature maps.
        out = torch.cat((x, out), 1)

        return out


class Transition(nn.Module):
    def __init__(self, inplanes, outplanes, cfg):
        super(Transition, self).__init__()
        self.bn1 = nn.BatchNorm2d(inplanes)
        self.select = channel_selection(inplanes)
        self.conv1 = nn.Conv2d(cfg, outplanes, kernel_size=1,
                               bias=False)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        out = self.bn1(x)
        out = self.select(out)
        out = self.relu(out)
        out = self.conv1(out)
        out = F.avg_pool2d(out, 2)
        return out


class densenet(nn.Module):

    def __init__(self, depth=40,
                 dropRate=0, dataset='cifar10', growthRate=12, compressionRate=1, cfg=None):
        super(densenet, self).__init__()

        assert (depth - 4) % 3 == 0, 'depth should be 3n+4'
        n = (depth - 4) // 3
        block = BasicBlock

        self.growthRate = growthRate
        self.dropRate = dropRate

        if cfg is None:
            cfg = []
            start = growthRate*2
            for _ in range(3):
                cfg.append([start + growthRate*i for i in range(n+1)])
                start += growthRate*n
            cfg = [item for sub_list in cfg for item in sub_list]

        assert len(cfg) == 3*n+3, 'length of config variable cfg should be 3n+3'

        # self.inplanes is shared state that the helper methods below
        # read and update while the layers are built.
        self.inplanes = growthRate * 2
        self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=3, padding=1,
                               bias=False)
        self.dense1 = self._make_denseblock(block, n, cfg[0:n])
        self.trans1 = self._make_transition(compressionRate, cfg[n])
        self.dense2 = self._make_denseblock(block, n, cfg[n+1:2*n+1])
        self.trans2 = self._make_transition(compressionRate, cfg[2*n+1])
        self.dense3 = self._make_denseblock(block, n, cfg[2*n+2:3*n+2])
        self.bn = nn.BatchNorm2d(self.inplanes)
        self.select = channel_selection(self.inplanes)
        self.relu = nn.ReLU(inplace=True)
        self.avgpool = nn.AvgPool2d(8)

        if dataset == 'cifar10':
            self.fc = nn.Linear(cfg[-1], 10)
        elif dataset == 'cifar100':
            self.fc = nn.Linear(cfg[-1], 100)

        # Weight initialization
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.data.normal_(0, math.sqrt(2. / n))
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(0.5)
                m.bias.data.zero_()

    def _make_denseblock(self, block, blocks, cfg):
        layers = []
        assert blocks == len(cfg), 'Length of the cfg parameter is not right.'
        for i in range(blocks):
            # Currently we fix the expansion ratio as the default value
            layers.append(block(self.inplanes, cfg=cfg[i], growthRate=self.growthRate, dropRate=self.dropRate))
            self.inplanes += self.growthRate

        return nn.Sequential(*layers)

    def _make_transition(self, compressionRate, cfg):
        # cfg is a number in this case.
        inplanes = self.inplanes
        outplanes = int(math.floor(self.inplanes // compressionRate))
        self.inplanes = outplanes
        return Transition(inplanes, outplanes, cfg)

    def forward(self, x):
        x = self.conv1(x)

        x = self.trans1(self.dense1(x))
        x = self.trans2(self.dense2(x))
        x = self.dense3(x)
        x = self.bn(x)
        x = self.select(x)
        x = self.relu(x)

        x = self.avgpool(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, features)
        x = self.fc(x)

        return x


if __name__ == '__main__':
    net = densenet()
    # Random dummy input; torch.FloatTensor(16, 3, 40, 40) would leave the
    # memory uninitialized, and the Variable wrapper is no longer needed.
    x = torch.randn(16, 3, 40, 40)
    y = net(x)
    print(y.shape)

    onnx_path = "onnx_model_name.onnx"
    torch.onnx.export(net, x, onnx_path)  # trace the model and save it as ONNX
    netron.start(onnx_path)  # serve the file and open it in the browser
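
The default `cfg` in Example 3 holds one entry per `BasicBlock` and one per `Transition`, plus a final entry for the classifier input. As a rough illustration, the pure-Python sketch below recomputes it for `depth=40` and `growthRate=12` and prints the entries that the constructor picks out. The function name `default_densenet_cfg` is an assumed helper name, not part of the original script.

```python
def default_densenet_cfg(depth=40, growth_rate=12):
    """Rebuild the default channel configuration used by densenet.__init__ (illustrative helper)."""
    assert (depth - 4) % 3 == 0, 'depth should be 3n+4'
    n = (depth - 4) // 3
    nested = []
    start = growth_rate * 2
    for _ in range(3):
        # n dense-block entries followed by one entry for the layer after the block
        nested.append([start + growth_rate * i for i in range(n + 1)])
        start += growth_rate * n
    flat = [item for sub_list in nested for item in sub_list]
    return n, flat


n, cfg = default_densenet_cfg()

dense1 = cfg[0:n]            # conv input channels of the n blocks in dense1
trans1 = cfg[n]              # conv input channels of the first Transition
trans2 = cfg[2 * n + 1]      # conv input channels of the second Transition
fc_in = cfg[-1]              # in_features of the final nn.Linear

print(n, len(cfg))
print(dense1[0], dense1[-1], trans1, trans2, fc_in)
```

With the defaults, every block adds 12 channels to its input, so the channel count grows from 24 to 168 across the first dense block, to 312 across the second, and to 456 across the third, which is the width that `self.fc` receives.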